|

8.1 Introduction to surveys

8.2 Methodological approaches

8.3 Doing survey research

8.4 Statistical Analysis

8.4.1 Descriptive statistics

8.4.2 Exploring relationships

8.4.3 Analysing samples

8.4.3.1 Generalising from samples

8.4.3.2 Dealing with sampling error

8.4.3.3 Confidence limits

8.4.3.4 Statistical significance

8.4.3.5 Hypothesis testing

8.4.3.6 Significance tests

8.4.3.6.1 Parametric tests of significance

8.4.3.6.2 Non-parametric tests of significance

8.4.3.6.2.1 Chi-square test

8.4.3.6.2.2 Mann Witney U test

8.4.3.6.2.3 Kolmogorov-Smirnov Test

8.4.3.6.2.3.1 One sample Kolmogorov-Smirnov test

8.4.3.6.2.3.2 Two sample Kolmogorov-Smirnov test

8.4.3.6.2.3.3 Alternative method of testing maximum divergence using a chi-square approximation

8.4.3.6.2.3.4 Why it is necessary to have ordinal categories?

8.4.3.6.2.3.5 The chi-square test or the Kolmogorov-Smirnov test?

8.4.3.6.2.3.6 A note on the Moses test of extreme reactions

8.4.3.6.2.4 H test

8.4.3.6.2.5 Sign test

8.4.3.6.2.6 Wilcoxon test

8.4.3.6.2.7 Friedman test

8.4.3.6.2.8 Q test

8.4.3.7 Summary of significance testing and association: an example

8.4.4 Report writing

8.5 Summary and conclusion

When to use the Kolmogorov-Smirnov test

Unworked examples

When to use the Kolmogorov-Smirnov

The Kolmogorov-Smirnov (K-S) test is a test of two samples to see whether they come from the same population.

The K-S test is used to test whether a sample comes from a population with a known (or theoretical) distribution, or to test whether two samples come from the same population, i. e. whether two samples differ significantly.

The test may be carried out on samples of any size, provided the samples are independent, and sample data is of ordinal scale.

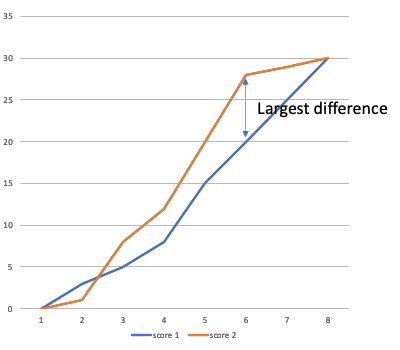

The test compares sample cumulative frequency distributions for significant difference. For example:

The test is performed in different ways for each of the following situations:

- One sample test, all sample sizes.

- Two sample test for small samples, one or two tail test. Both small samples must be of the same size.

- Two sample test for large samples.

The difference in the two sample cases occurs only in the testing statistic and critical value.

For the two sample test small samples are those of size 30 or less.

The one sample case will be considered first and then the two aspects of the two sample case.

Top

8.4.3.6.2.3.1 One sample Kolmogorov-Smirnov test

Hypotheses

What we need to know

Sampling distribution

Assumptions

Testing Statistic

Critical values

Worked examples

Hypotheses

H0:There is no significant difference between the observed and theoretical distributions.

HA:There is a significant difference between the observed and theoretical distributions.

Restricted to a two-tail test.

Top

What we need to know

To perform the test we need to know or be able to derive the frequency distributions of the sample for a range of ordinal categories, (the more the better). In addition, we must be able to derive a theoretical distribution of expected frequencies for the same categories from the null hypothesis. This is analgous to the derivation of expected frequencies in the chi-square test (see Section 8.4.3.6.2.1).

Top

Sampling distribution

The Kolmogorov-Smirnov test is a comparison of cumulative frequencies. The sampling distribution is the distribution of differences between cumulative frequencies.

In the one sample case, the comparison is between the sample cumulative frequency and the cumulative frequency derived from the expected frequencies of the null hypothesis.

The cumulative frequencies are then inspected to find the point of maximum divergence. The maximum divergence is then compared with the Kolmogorov Smirnov maximum random divergences. The K-S maximum divergencies form the limits of the sampling distribution. They are the divergences that could pertain, at a given level of confidence, if the sample differs from the theoretical distribution as a result of sampling error.

Consequently, if the maximum difference between the observed sample cumulative distribution and the expected theoretical distribution exceeds the K-S maximum divergence, then there is asignificant difference between the two distributions, and the null hypothesis (which generated the theoretical distribution) must be rejected.

Before the cumulative frequencies are calculated, it is necessary that the frequency categories are ordinal. If they are not, then the test becomes inapplicable, as nominal categories may be shuffled around to produce a false significant divergence (See Section 8.4.3.6.2.3..4 for an example).

Top

Assumptions

The K-S Test involves no assumptions relating to the shape of the populations from which the samples are drawn.

Top

Testing statistic

The formula for the testing statistic for the K-S test is

K = MAXIMUM |ORCF-ERCF|

Absolute values are denoted by straight line brackets and relate solely to the magnitude of the enclosed variables. In other words any minus sign is ignored.

K is the name of the testing statistic for a K-S one sample test. It is equal to the maximum absolute divergence between the observed relative cumulative frequency (ORCF) and the expected relative cumulative frequency (ERCF).

The cumulative frequencies are in relative terms so that they are in the same units, i.e. range from zero to one.

Thus:

ORCF = Observed cumulative frequency divided by total frquency

ERCF = Expected cumulative frequency divided by total frquency

By observed (or expected) cumulative frequency, we refer to the cumulative frequency at each class interval, not just the final cumulative frequency. If we merely considered the final cumulative frequency then the relative cumulative frequency would always be equal to one. (See worked example below.)

Top

Critical values

The tables of critical values are given by Table 8.4.3.6.2.3.1. The maximum random divergences at significance levels of 5% and 1% are given for samples of size 1 to 30.

To find the value look up sample size and read off for relevant significance level.

For samples exceeding size 30, the maximum divergence is approximated by dividing specified absolute values (the last row of the table) by the square root of the sample size. For example, at a 5% significance level with a sample size of 100, the critical value would be 1.36 divided by square root of 100 = 1.36/10 = 0.136.

The decision rule is to reject the null hypothesis if the calculated K exceeds the critical value.

Table 8.4.3.6.2.3.1 K-S critical values for one sample test

n |

0.05 Sig |

0.01 Sig |

5 |

0.57 |

0.67 |

6 |

0.52 |

0.62 |

7 |

0.49 |

0.58 |

8 |

0.46 |

0.54 |

9 |

0.43 |

0.51 |

10 |

0.41 |

0.49 |

11 |

0.39 |

0.47 |

12 |

0.38 |

0.45 |

13 |

0.36 |

0.43 |

14 |

0.35 |

0.42 |

15 |

0.34 |

0.4o |

16 |

0.33 |

0.39 |

17 |

0.32 |

0.38 |

18 |

0.31 |

0.37 |

19 |

0.30 |

0.36 |

20 |

0.29 |

0.36 |

25 |

0.27 |

0.32 |

30 |

0.24 |

0.29 |

Over 30

Divide by √n |

1.36/√n |

1.63/√n |

Top

Worked example of one-sample K-S test

It was contended that an increase in turnout at a General Election would help the Labour Party.

A random sample of 35 seats was selected from the 163 where turnout was up in 1966. The Table below is a frequency table showing the number of the sample seats with a 'swing' below and above the national average. For example, one seat had an average swing of over 3% greater than the national average. Does this data support the contention that increased turnout favours the Labour Party?

Variations in percentage swing to Labour around national average

| |

-3% or less |

-1.5% to -2.9% |

-0.5% to -1.4% |

+0.5% to -0.5% |

0.5% to 1.4% |

1.5% to 2.9% |

3% or more |

Total |

| Seats where turnout up |

2 |

3 |

6 |

9 |

10 |

4 |

1 |

35 |

Note: the total number of seats in each of the categories were about equal.

We may carry out a one sample K-S test on this data. The categories are ordinal and we can derive an expected cumulative frequency. The expected frequencies are derived from the null hypothesis that the increased turnout does not effect swing. In which case we would expect the 35 seats with increased turnout to be evenly distributed between the seven categories. The expected frequencies would therefore be 5 in each category.

Hypotheses:

H0:There is no significant difference between the observed and expected cumulative frequency distributions

HA: The cumulative frequency distributions differ signifcantly.

This is a two-tail test of significance.

Significance level: 5%

Testing statistic:

K = MAXIMUM |ORCF-ERCF|

Critical value: From tables of critical K values (Table 8.4.3.6.2.3.1):

K= 1.36/√n = 1.36/√35 = 0.23

Decision rule: reject H0 if calculated K>0.23

Computation:

Variations in percentage swing to Labour around national average: computations

| |

-3% or less |

-1.5% to -2.9% |

-0.5% to -1.4% |

+0.5% to -0.5% |

0.5% to 1.4% |

1.5% to 2.9% |

3% or more |

Total |

| Seats where turnout up Observed frequency |

2 |

3 |

6 |

9 |

10 |

4 |

1 |

35 |

| Observed cumulative frequency |

2 |

5 |

11 |

20 |

30 |

34 |

35 |

|

| Observed relative cumulative frequency (ORCF) |

2/35 |

5/35 |

11/35 |

20/25 |

30/35 |

34/35 |

35/35 |

|

| Expected frequencies |

5 |

5 |

5 |

5 |

5 |

5 |

5 |

35 |

| Expected cumulative frequency |

5 |

10 |

15 |

20 |

25 |

30 |

35 |

|

| Expected relative cumulative frequency (ORCF) |

5/35 |

10/35 |

15/35 |

20/35 |

25/35 |

30/35 |

35/35 |

|

| Absolute relative difference |

3/35 |

5/35 |

4/35 |

0 |

5/35 |

4/35 |

0 |

|

So

k=MAXIMUM |OCRF-ECRF| = 5/35 = 1/7 = 0.143

Decision: Cannot reject the null hypotheses (H0) as calculated K<0.23. The data does not support the contention.

Top

8.4.3.6.2.3.2 Two sample Kolmogorov-Smirnov test

Hypotheses

What we need to know

Sampling distribution

Assumptions

Testing Statistic

Critical values

Computational procedures

Worked examples

Hypotheses

H0:The two samples are from identical populations.

HA:The two samples are from different populations. Two-tail test

HA:The values of the population from which one of the samples is drawn have a larger error term than the values of the population from which the other sample was drawn. One-tail test

Top

What we need to know

We need to know the frequency distributions of the two samples over the same class intervals, so that the cumulative frequencies may be found.

Top

Sampling distribution

The Kolmogorov-Smirnov test compares cumulative frequency distributions.

The sampling distribution for the two sample test is the maximum differences between all the possible randomly selected pairs of sample cumulative frequencies. This is similar to the one sample case but differs in as much as both samples are prone to sampling error and therefore larger differences between distributions may be attributed to sampling error.

If the maximum difference between the two observed sample cumulative frequency distributions exceed the Kolmogorov Smirnov maximum random divergencies, then the two samples are significantly different.

Top

Assumptions

The K-S Test involves no assumptions relating to the shape of the populations from which the samples are drawn.

Top

Testing statistic

The testing statistic is different depending on sample size. For small samples (below n=30) the samples must be the same size.

For two small samples (of equal size) K = MAXIMUM |ORCF1-ORCF2|

Where

ORCF1 is observed cumulative frequency of sample 1

ORCF2 is observed cumulative frequency of sample 2

Note that when both samples are of the same size then there is no need to calculate relative cumulative frequencies because this would merely involve us in dividing both sets of cumulative frequencies by the same number each time.

As will be seen below, the table of critical values is adapted so that it is not necessary to find the relative cumulative frequency, the simple difference in actual cumulative frequencies is sufficient for small samples.

Top

Critical values

As with the testing statistic, the critical values are found differently for small and large samples.

Two small samples:

Table 8.4.3.6.2.3.2 provides critical values of K for samples of size 5 to 30 for one- and two-tail tests at 1% and 5% significance levels. The appropriate significance level is selected. Reading down the column, the size of the sample is located. The critical value is then found by reading across to the first column of the table.

For example, a 5% two-tail test for samples of 19: Read down the column marked 0.025, 19 is in the 18–22 group. Read across to the first column, critical value equals 9. Note that this is an absolute value and it is not the relative value.

Table 8.4.3.6.2.3.2 K-S critical values for two small samples

| Critical values |

0.05 Signifcance |

0.025 Signifcance |

0.01 Signifcance |

0.005 Signifcance |

| 4 |

5 |

|

|

|

| 5 |

6–8 |

5–6 |

5 |

5 |

| 6 |

9–12 |

7–9 |

6–8 |

6–7 |

| 7 |

13–16 |

10–13 |

9–10 |

7–9 |

| 8 |

17–21 |

14–17 |

11–14 |

10–12 |

| 9 |

22–27 |

18–22 |

15–17 |

13–15 |

| 10 |

28–30 |

23–27 |

18–21 |

16–19 |

| 11 |

|

28–30 |

22–26 |

20–23 |

| 12 |

|

|

27–30 |

24–27 |

| 13 |

|

|

|

28–30 |

| |

|

|

|

|

Top

Two large samples (of any size over 30):

The critical value for the two large sample case is found as follows. Select the appropriate multiplier from Table 8.4.3.6.2.3.3 for the stated significance level and substitute it into the following formula, where M stands for multiplier

Critical K =M√((n1 + n2)/(n1)(n2))

Thus for a 5% level of significance for a two-tail test with samples of 50 and l00, critical K would be

1.36√((50 + 100)/(50)(100)) = 1.36√150/5000 = 1.36√(3/100) = 1.36√0.03 = 0.2356

The null hypothesis is rejected if the calculated K exceeds the critical value.

Table 8.4.3.6.2.3.3 K-S critical value multiplier for two large samples

| Signifcance level |

0.05 |

-0.25 |

0.01 |

0.005 |

| Multiplier |

1.22 |

1.36 |

1.51 |

1.63 |

Top

Worked example of two-sample K-S test

1. Two small samples

The economics teacher at a certain school claims that the best economics students are those who are also taking mathematics at 'A' level. The 'A' level economics results gained by thirty students are shown in the table below, fifteen of them attempted mathematics at 'A' level while the other half took non-mathematical subjects.

Test the economics teacher's claim at a 5% significance level.

| Grade of Economics A level |

A |

B |

C |

D |

E |

o |

Fail |

Total |

| Maths Students |

2 |

6 |

3 |

1 |

1 |

1 |

1 |

15 |

| Non-maths students |

1 |

3 |

6 |

0 |

3 |

0 |

2 |

15 |

A simple parametric test is not viable as it would mean assuming some kind of numerical evaluation of the gradings. While such an evaluation is possible if the relevant data is available, it is inapplicable here given the limited data above. A chi-square test is also inapplicable as none of the expected frequencies would exceed 5 if we assume that the two groups provide results which are not significantly different. Combining categories would not provide us with a meaningful or desirable test particularly as the K-S test for two small samples can be carried out on the data. It is a simple matter to derive the cumulative frequencies for both samples and both samples are of the same size, a necessity for the K-S small samples test.

Hypotheses:

H0: The two samples are from identical distributions; mathematics students do not achieve significantly

better results than non-mathematics students.

HA: Maths students achieve better results.

This is a one-tail test of significance.

Significance level: 5%

Testing statistic: For two small samples (of equal size)

K = MAXIMUM |OCF1-OCF2| (Note: not relative as no need as sample same size.)

Critical value: Derived from Table 8.4.3.6.2.3.2 for a one tail test at 5% for a sample size of 15. Critical value equals 7

Decision rule: reject H0 if K>7.

Computation:

See table below:

| Grade of Economics A level |

A |

B |

C |

D |

E |

o |

Fail |

Total |

| Cumulative frequency Maths Students (sample 1) |

2 |

8 |

11 |

12 |

13 |

14 |

15 |

15 |

| Cumulative frequency Non-maths students (sample 2) |

1 |

4 |

10 |

10 |

13 |

0 |

15 |

15 |

| Difference |

1 |

4 |

1 |

2 |

0 |

1 |

0 |

|

K = MAXIMUM |OCF1-OCF2| = 4

Decisions: Cannot reject H0 as calculated K is less than the critical value. There is no significant difference in the two sets of results.

Top

2. Two large samples

Two samples, one of 50 men and the other of 40 women, were interviewed to ascertain the use they made of public transport in Birmingham. The results are summarised in the table below.

| Gender/Grades |

A |

B |

C |

D |

E |

Totals |

| Male |

8 |

2 |

3 |

2 |

35 |

50 |

| Female |

14 |

6 |

10 |

6 |

4 |

40 |

A: To and from work, at least 10 journeys a week

B: 6 to 9 journeys a week on average

C: 3 to 5 journeys a week on average

D: One or two journeys a week

E: Rarely, if ever use public transport

Test the hypothesis that there is no significant difference in usage between sexes.

A parametric test is inapplicable as the categories are not easily converted to interval scale data.

A chi-square test would be possible, except for the relatively high number of small expected frequencies. Combining categories would loose the precision of the data.

The K-S two sample test is the most appropriate as the data is clearly ordinal.

The large sample two-tail signifcance tests is the one used as the samples exceed 30.

Hypotheses:

H0:There is no significant difference in usage between men and women.

HA: There is a significant difference.

This is a two-tail test of significance.

Significance level: 5%

Testing statistic:

K = MAXIMUM |ORCF1-ORCF2|

Critical value:

Derived from Table 8.4.3.6.2.3.3 the multiplier = 1.36 and the samples are 50 and 40 respectively

So: M√((n1 + n2)/(n1)(n2))

=1.36√((50 + 40)/(50)(40)) = =1.36√((90)/(2000) =0.288

Decision rule: reject H0 if calculated K (using relative cumulative frequencies)>0.288.

Computation:

See table:

| Gender/Grades |

A |

B |

C |

D |

E |

Totals |

| Male cf (observed 1) |

8 |

10 |

13 |

15 |

50 |

50 |

| ORCF1 (i.e. divide by 50) |

.16 |

.20 |

.26 |

.30 |

1.00 |

|

| Female cf (observed 2) |

14 |

20 |

30 |

36 |

40 |

40 |

| ORCF2 (i.e. divide by 40) |

.35 |

.50 |

.75 |

.90 |

1.00 |

|

| Differences |

.19 |

.30 |

.49 |

.60 |

0 |

|

Therefore K= MAXIMUM |ORCF1-ORCF2| = 0.6

Decision rule: reject H0 as calculated K greater than the critical value of 0.288. There is a significant difference in usage of public transport between men and women in Birmingham.

Top

8.4.3.6.2.3.3 Alternative method of testing maximum divergence using a chi-square approximation

This alternative method applies to the two sample cases for all sample sizes provided a one-tail test is being carried out. It may be applied to two samples of different sizes and is therefore useful for small samples when n1 does not equal n2. However, especially for small samples, the approximation tends to be in the conservative direction. [There are other ways of dealing with two small samples when n1 does not equal n2 but the chi-square approximation is the simplest and most practical.]

The method used is as follows. Calculate

K = MAXIMUM |ORCF1-ORCF2| as in the examples explained above. Then calculate a value of chi-square (χ2) from the following formula:

χ2 = 4K2((n1.n2)/(n1+n2))

reject the null hypotheis if calculated χ2 exceeds critical χ2 for 2 degrees of freedom

Example: Does having a university degree result in a more highly rated occupation by the age of 25? A sample of 25 year-old degree holders and non-degree holders were asked about their occupation and were categorised into five groups:

A: unskilled; B: semi-skilled; C: skilled manual; D: non-professional non-manual; E: professional and executive.

The sample is shown in the following table:

| Degree/Occupation |

A |

B |

C |

D |

E |

Totals |

| Non-degree |

30 |

46 |

33 |

71 |

20 |

200 |

| Degree |

5 |

8 |

13 |

46 |

28 |

100 |

To test the claim we could carry out several tests.

First, we could derive the proportion of non-degree holders whose occupation is classed as manual and then carry out a two sample z-test of proportions by comparing the proportion with that of degree holders. A the z-test of proportions effectively splits the data solely into two groups, hence there is a loss of information.

We could also carry out a χ2 test on the data, as all the conditions are fulfilled but it is a statistically less powerful test than the K-S test.

Finally, a K-S test may be carried out on the data as it has been divided into ordinal categories.

Hypotheses:

H0:There is no significant difference in occupation between degree holders and non-degree holders.

HA: There is a significant difference.

This is a two-tail test of significance.

Significance level: 5%

Testing statistic:

χ2 = 4K2((n1.n2)/(n1+n2)), where

K = MAXIMUM |ORCF1-ORCF2|

n1 = size of sample 1

n2 = size of sample 2

Critical value:

Derived from tables of chi-square critical values: χ2 for two degrees of freedom at 5% significance level is 5.99

Decision rule: reject H0 if calculated χ2>5.99

Computation:

See table:

| Degree/Occupation |

A |

B |

C |

D |

E |

Totals |

| Non-degree |

30 |

46 |

33 |

71 |

20 |

200 |

| Degree |

5 |

8 |

13 |

46 |

28 |

100 |

| Observed cf non-degree (sample 1) |

30 |

76 |

109 |

180 |

200 |

|

| ORCF1 (i.e. divide by 200) |

.15 |

.38 |

.545 |

.90 |

1.00 |

|

| Observed cf degree (sample 2) |

5 |

13 |

26 |

72 |

100 |

|

| ORCF2 (i.e. divide by 100) |

.05 |

.13 |

.26 |

.72 |

1.00 |

|

| Differences |

.10 |

.25 |

.285 |

.18 |

0 |

|

Therefore K= MAXIMUM |ORCF1-ORCF2| = 0.285

χ2 = 4K2((n1.n2)/(n1+n2)) = 4(0.285)2(200.100)/(200+100) = 4(0.285)2(20000)/300 =21.66

Decision: reject H0 as calculated χ2>5.99

Top

8.4.3.6.2.3.4 Why it is necessary to have ordinal categories?

To illustrate the necessity of having data that may be ranked as ordinal categories, consider the following example of the application of a K-S test to nominal data.

Example : Two painters had samples of their work viewed to see if there was a significant difference in predominant colour. The results are in the table below.

As we have two equal size samples of 25, a small sample K-S test would appear appropriate. Thus we calculate the cumulative frequencies for both samples and calculate the differences between the two cumulative frequencies. The maximum difference is 12, hence K = 12. From Table 8.4.3.6.2.3.3 the critical value for

a two tail, 95% test is 10. The calculated K exceeds 10, hence we reject the null hypothesis, there is a significant difference between the two samples.

| Artist/colour |

Red |

Blue |

Green |

Brown |

Others |

Totals |

| Artist 1 |

10 |

5 |

2 |

3 |

5 |

25 |

| Artist 2 |

1 |

2 |

10 |

7 |

5 |

25 |

| Observed cumulative frequency 1 |

10 |

15 |

17 |

20 |

25 |

|

| Observed cumulative frequency 2 |

1 |

3 |

13 |

20 |

25 |

|

| Differences |

9 |

12 |

4 |

0 |

0 |

|

Therefore K= MAXIMUM |ORCF1-ORCF2| = 12

However, there is no need, or indeed reason, to put the categories in the order "Red", "Blue", "Green", "Brown", "Others". Suppose we just move one colour to a different part of the order, so that the categories are ordered thus: "Red", "Brown", "Blue", "Green" , "Others".

The table below

shows the calculation of K for this new (equally reasonable) ordering. As can be seen, the maximum difference is 9, therefore calculated K is less than critical K, hence our decision is reversed. This means that the test is invalid because of a lack of consistency when categories can be ordered in any way, i.e. when categories are not ordinal.

| Artist/colour |

Red |

Brown |

Blue |

Green |

Others |

Totals |

| Artist 1 |

10 |

3 |

5 |

2 |

5 |

25 |

| Artist 2 |

1 |

7 |

2 |

10 |

5 |

25 |

| Observed cumulative frequency 1 |

10 |

13 |

18 |

20 |

25 |

|

| Observed cumulative frequency 2 |

1 |

8 |

10 |

20 |

25 |

|

| Differences |

9 |

5 |

8 |

0 |

0 |

|

Therefore K= MAXIMUM |ORCF1-ORCF2| = 9

Top

8.4.3.6.2.3.5 The chi-square test or the Kolmogorov-Smirnov test?

Where it is possible to use either the chi-square (χ2) test or the Kolmogorov-Smirnov (K-S) test on the same sample data, (for example, the data in example on degrees and ocupation) then it is preferable to

use the K-S Test as this is regarded as the most powerful of the two non-parametric tests.

Besides being statistically more powerful, the K-S test does not lose information because of grouping

as the χ2 test sometimes does. In fact the more class intervals the better for the K-S test. Similar to the χ2

test, the K-S Test is theoretically applicable to continuous data but requires no correction when applied to discrete data because the error resulting is in the 'safe' direction (i.e. will not wrongly reject a null hypothesis). Yates' Correction should be applied to a χ2 test when the number of degrees of freedom is equal to one.

Although the K-S test is preferable to the χ2 test it has one major limitation, that being its ability to consider, at most, only two samples at a time.

Top

8.4.3.6.2.3.6 A note on the Moses test of extreme reactions

The Moses test of extreme reactions essentially measures the differences in variability of two samples. More specifically it assess the difference in extreme scores between control and experimental groups. It is less statistically powerful and far more restricted than the Komogorov-Smirnov test and is therefore not included here.

Top

Unworked examples

1. The average percentage variations in price of tobacco and brewery shares was recorded over a 30 day period, the results are given in the table below. The body of the table gives the number of trading days that the two types of shares fluctuated by the amount indicated in the top row. Do tobacco and brewery shares follow the same market trends?

| Industry/share change % |

Less than -5% |

-3% to -5% |

-3% to -2% |

-1% to -2% |

-1% to +1% |

+1% to +2% |

+2% to +3% |

+3% to +5% |

Over 5% |

| Tobacco |

0 |

0 |

4 |

10 |

6 |

6 |

2 |

1 |

1 |

| Brewery |

2 |

5 |

2 |

4 |

4 |

8 |

4 |

1 |

0 |

Top

2. The table below shows the number of attempts at passing a driving test of 50 women and 80 men at a Driving Test Centre. Is there a significant difference in number of attempts by gender?

| Number of attempts |

1 |

2 |

3 |

4 |

5 or more |

Total |

| Women |

20 |

18 |

3 |

4 |

5 |

50 |

| Men |

30 |

20 |

20 |

2 |

8 |

80 |

Top

3. Two classes at the same school were given an identical mathematics test. The results are sunnnarised in the frequency table below. Does Class A have a significantly better set of grades than Class B?

| Class/grades |

Below 40% |

40% to 49% |

50% to 59% |

60% to 69% |

70% to 79% |

80% to 89% |

90% to 100% |

Totals |

| Class A |

5 |

6 |

9 |

8 |

3 |

3 |

1 |

35 |

| Class B |

8 |

12 |

7 |

0 |

0 |

1 |

2 |

30 |

Top

4. The number of breakages per 100 items at a china factory are given in the table below, where the categories I to V refer to the thickness of the chinaware, (I being the thickest grade). Is there a significant difference in recorded breakages according to the thickness of the china?

| Breakages/thickness |

I |

II |

III |

IV |

V |

Total |

| Number of breakages per 100 items |

4 |

8 |

3 |

5 |

10 |

30 |

Top

5. Two randomly selected samples of workers at a large factory were asked questions designed to place them on a scale of conservatism. Sample A were trade unionists and sample B non-trade unionists. The scale ranged from 1 to 18 the higher the score the less conservative the respondent.

| Sample/scores |

1–3 |

4–6 |

7–9 |

10–12 |

13–15 |

16–18 |

Totals |

| Sample A |

1 |

4 |

5 |

13 |

8 |

5 |

36 |

| Sample B |

2 |

6 |

10 |

10 |

2 |

6 |

36 |

|